There was this event called Microsoft Ignite a couple of weeks ago and from a data perspective, it was packed with news. While most of the new stuff was unsurprisingly AI- and data-focused, there were also some new related horizons that Microsoft started to explore. Here are some highlights cherry-picked for you by our data and AI experts Samu Niemelä and Pontus Mauno.

Microsoft Fabric

First and foremost, Microsoft announced General Availability for Microsoft Fabric. For us at Zure this was highly anticipated news, as we have been working with the preview version of the product since this summer. Microsoft has developed Fabric rapidly, taking a nigh-unusable product from six months ago to something that can actually be used and developed on. And of course, the work continues after the GA announcement as well.

But what does it REALLY mean that MS Fabric is now generally available? The biggest thing, I think, is that Microsoft has given the green light: in their eyes, MS Fabric is now production-ready. That means Microsoft provides SLAs for the workloads and support if needed. This might lower the threshold for organizations to start exploring and evaluating how it could be used in their environments.

The second thing is the pricing. There were already pay-as-you-go prices for the SKUs, but Microsoft also released reservation prices for them. If you have 24/7 operations up and running, these capacities can be bought in advance for a year. This gives capacity management a lot of flexibility to acquire different capacities for different environments and workloads. That said, as you can see on the pricing page, Fabric SKUs are not cheap and rival Dedicated Synapse pools in price.
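To make the pay-as-you-go versus reservation trade-off a bit more tangible, here is a minimal sketch of the break-even arithmetic. It assumes that a reservation is billed around the clock while a pay-as-you-go capacity is billed only for the hours it is running. The hourly prices are made-up numbers for illustration, not actual Fabric prices, and the function names are our own.

```python
def break_even_utilization(payg_hourly: float, reserved_hourly: float) -> float:
    """Fraction of the day a capacity must run before a reservation is cheaper."""
    if payg_hourly <= 0 or reserved_hourly < 0:
        raise ValueError("prices must be positive")
    return reserved_hourly / payg_hourly


def cheaper_option(hours_running_per_day: float,
                   payg_hourly: float,
                   reserved_hourly: float) -> str:
    """Compare daily cost: pay-as-you-go bills running hours, a reservation bills all 24."""
    payg_cost = payg_hourly * hours_running_per_day
    reserved_cost = reserved_hourly * 24
    return "reservation" if reserved_cost < payg_cost else "pay-as-you-go"


# Hypothetical prices (NOT real Fabric prices): 10.0/h pay-as-you-go, 6.0/h reserved.
PAYG, RESERVED = 10.0, 6.0

threshold = break_even_utilization(PAYG, RESERVED)
always_on = cheaper_option(24, PAYG, RESERVED)      # a 24/7 production workload
office_hours = cheaper_option(8, PAYG, RESERVED)    # a dev capacity paused at night
```

With these example numbers, the reservation wins for the always-on workload, while a capacity that only runs during office hours is better off on pay-as-you-go.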

Related to the GA announcement, a lot of new Fabric features were also announced at Ignite. For example:

⭐ Git integration & deployment pipelines took a step forward and now support lakehouse and notebook items. While still not perfect, they now offer a rudimentary CI/CD workflow to enable production-grade deployments. (See image for example)

⭐ Mirroring! Microsoft also announced a new feature they are working on, called mirroring. This didn't get a lot of attention but is a really interesting new capability. The core idea is to enable real-time sync between different databases and Fabric with Change Data Capture technology. This reduces the need for individual load processes from different sources and instead creates a replica that lets you run cross-database queries straight from the portal. It was also interesting that the initial list of supported databases for this feature included Snowflake and MongoDB alongside Cosmos DB and Azure SQL DB. Let's keep an eye on this one!

⭐ The buzzword of the whole Ignite was Copilot, and Fabric wasn't spared from it either. Microsoft announced that copilots are going to be rolled out for every workload inside Fabric, meaning that there is going to be a separate copilot for, say, Power BI, Data Factory, or Notebooks. These are rolled out in phases and, according to the documentation, everybody will have access to them in March 2024.
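To make the mirroring idea from the list above a bit more concrete, here is a toy sketch of how Change Data Capture style replication works in principle: the source emits a stream of insert, update, and delete events, and the replica applies them in order. This is not Fabric's implementation or API. `ChangeEvent` and `apply_changes` are made-up names for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change from the source database (hypothetical shape)."""
    op: str                      # "insert", "update" or "delete"
    key: int                     # primary key of the affected row
    row: Optional[dict] = None   # new row contents; unused for deletes


def apply_changes(replica: Dict[int, dict], events: List[ChangeEvent]) -> Dict[int, dict]:
    """Apply change events to the replica in the order they were captured."""
    for event in events:
        if event.op in ("insert", "update"):
            # Copy the row so the replica does not share state with the event.
            replica[event.key] = dict(event.row or {})
        elif event.op == "delete":
            replica.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return replica


events = [
    ChangeEvent("insert", 1, {"city": "Helsinki"}),
    ChangeEvent("insert", 2, {"city": "Tampere"}),
    ChangeEvent("update", 1, {"city": "Espoo"}),
    ChangeEvent("delete", 2),
]
replica = apply_changes({}, events)
```

After replaying the four events, the replica holds only row 1 with its updated value, without any full reload of the source table.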

Copilot

As mentioned in the previous section, the one word to describe this year's Ignite would be copilot. It says something that the Book of News has its own section for Microsoft Copilot these days. The main idea seems to be that if it is a Microsoft product, it has its own copilot.

Copilot for Azure

Copilot for Azure was introduced at Ignite and is now in preview. It uses large language models to help create, configure, discover, and troubleshoot Azure services with natural language. The user interface looks to be the same as in other Microsoft copilots: a chat sidebar for interacting with the copilot. Microsoft announced that the copilot is going to be available not only in the portal but also in the CLI and mobile apps.

This can be a useful tool for the lone rangers in small companies who handle everything IT-related. Using the copilot as a sparring partner to create new solutions and optimize existing ones in the Azure cloud can reduce the developer's second-guessing. Copilot works as a team for the individual, helping ensure that the implemented solutions follow best practices without having to go through multiple documents.

Copilot for Azure can also work as a more interactive learning tool, for example for on-prem IT admins who are making the leap to the Azure cloud side. Microsoft Learn and the documentation are good places to get more information, but they lack the interactive aspect. With copilot, in theory, you could create a learning path and have a personal assistant to ask questions or verify things on the fly. It will be interesting to see how it develops and how it works in practice.

Azure AI

New Azure OpenAI models

Azure OpenAI Service is getting the new OpenAI models GPT-4 Turbo, GPT-3.5 Turbo 16k, and DALL-E 3 as well. Furthermore, Microsoft promised to follow suit with a public preview of GPT-4 Turbo Vision before the end of this year, enabling usage of images with GPT-4 (as is already possible with OpenAI itself). The new models provide bigger token limits for contexts, cheaper pricing compared to their predecessors, and a newer knowledge cut-off point of Spring 2023.

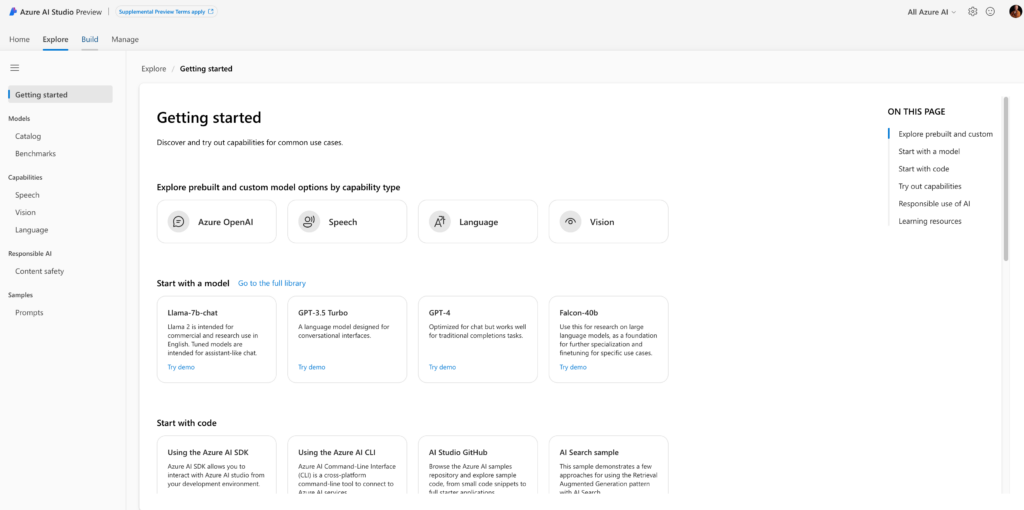

Azure AI Studio

On the tooling front, Microsoft also published the Azure AI Studio preview to replace the previous OpenAI Studio, continuing on the path to converge OpenAI and ML management in Azure into a single studio. The promise is that Azure AI Studio would become a one-stop shop for developing and managing AI solutions with capabilities to build, test, and deploy pre-trained and custom models. Other capabilities in the Studio are LLM evaluation and prompt orchestration for applications.

If the promises for AI Studio are delivered, it should make managing and running AI solutions and models (LLM or other) much easier. The addition of LLM evaluation should also make delivering trustworthy and manageable LLM solutions more plausible. Plus, the built-in enterprise-grade security and a shared development environment should appeal to larger development teams running large AI solutions who want to make their life simpler.

Customer Copyright Commitment Expansion into Azure AI

For the more legally oriented, Microsoft also offered reassurance by updating their Customer Copyright Commitment (i.e. Microsoft's commitment to defend commercial customers and pay for any adverse judgments if they are sued for copyright infringement for using the outputs generated by Azure OpenAI Service) and its related list of mitigations required for the commitment to be valid. For example, metaprompts must be managed properly, and proper testing and evaluation must be in place.

Azure Cognitive Search transforms to Azure AI Search

Azure Cognitive Search was taken into Microsoft's famous name blender and was renamed to Azure AI Search. At Ignite, it was also announced that vector search and semantic ranker (previously semantic search) are generally available.

Vector search is a vector database solution that represents documents as vectors instead of plain text. This can speed up the search experience and make it more precise when different datasets are normalized into vectors. Zure tested it out back in August and a new blog is coming soon.
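As a rough illustration of the principle, here is a tiny vector search sketch in plain Python. Documents and the query are represented as vectors, and the closest documents are found with cosine similarity. The three-dimensional vectors and document names are hand-made for the example. In a real solution the vectors would come from an embedding model, and this is not the Azure AI Search API.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vector: List[float],
           index: Dict[str, List[float]],
           top_k: int = 2) -> List[Tuple[str, float]]:
    """Score every document against the query and return the top_k best matches."""
    scored = [(doc_id, cosine_similarity(query_vector, vector))
              for doc_id, vector in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hand-made toy "embeddings"; real ones have hundreds or thousands of dimensions.
index = {
    "fabric-pricing": [0.9, 0.1, 0.0],
    "copilot-intro": [0.1, 0.9, 0.1],
    "chaos-studio": [0.0, 0.2, 0.9],
}
results = search([0.8, 0.2, 0.0], index, top_k=2)
```

The query vector points in nearly the same direction as the first document, so that one ranks first. A production vector index would use approximate nearest neighbor search instead of scoring every document.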

Furthermore, the preview of Integrated Vectorization was also announced. It is a tool to automate some parts of the vectorization process that previously had to be handled manually. Our initial testing shows some promise here in expediting, for example, RAG solution development!
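One of the typical manual steps in a RAG pipeline is splitting documents into overlapping chunks before embedding them, and as far as we understand, chunking is among the steps this kind of tooling aims to take over. Below is a sketch of what that step can look like when hand-written. The `chunk_text` helper and its default sizes are our own assumptions for illustration and do not describe how Integrated Vectorization works internally.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character chunks that overlap their neighbors."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be between 0 and chunk_size - 1")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


# Small sizes so the overlap is easy to see.
chunks = chunk_text("abcdefghij", chunk_size=4, overlap=2)
```

The overlap means a sentence cut at a chunk border still appears whole in a neighboring chunk, which tends to help retrieval quality. Each chunk would then be sent to an embedding model and stored in the vector index.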

Microsoft tailor-made chips

Microsoft is aiming to free itself from the overheated GPU markets by starting to produce its own custom-designed in-house AI chips: the Azure Maia 100 AI Accelerator and the Azure Cobalt 100 CPU. Maia chips are designed to train and run AI models while Cobalt 100 is designed to run general-purpose workloads.

Microsoft announced that they are starting to roll out these chips into Azure data centers early next year, specifically to power Azure OpenAI Service and different Copilots. As other details are still a little bit up in the air, it remains to be seen if these chips can help with the computing bottlenecks currently limiting the usage of many AI services.

Azure

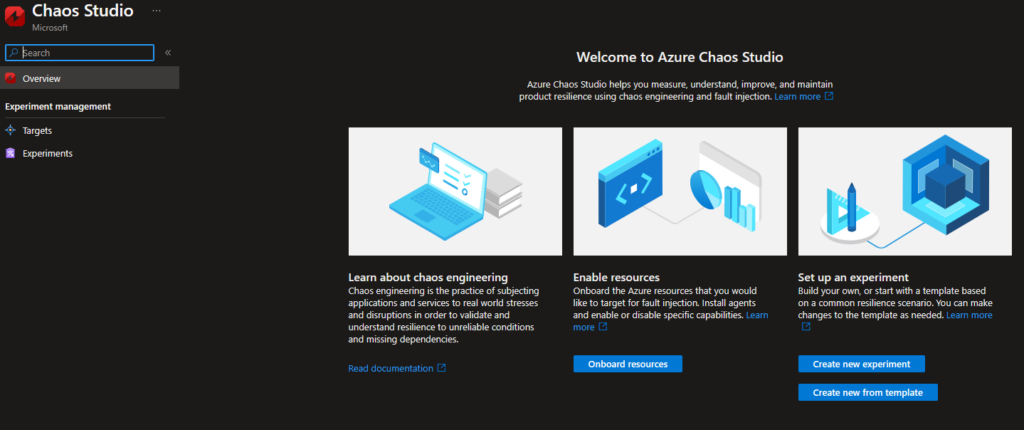

Azure Chaos Studio

Azure Chaos Studio also became generally available at this year's Ignite. Chaos Studio enables users to run experiments that inject faults simulating real-life outages into their applications. The results of these experiments can be turned into actions that increase the application's resilience in unexpected situations.

With Chaos Studio, the backup and fault tolerance measures planned for an application can be tested in a controlled, real-life simulation. So what really happens to your application when an availability zone goes down or CPU spikes unexpectedly? This is an interesting service that can bring new insights into existing solutions.
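The underlying idea of fault injection can be sketched in a few lines of plain Python: wrap a dependency so that it fails on purpose, then check whether the calling code copes. This is only a toy illustration of the concept. `FlakyService` and `call_with_retry` are hypothetical names and have nothing to do with the Chaos Studio API, which injects faults into actual Azure resources.

```python
import random
from typing import Any, Callable


class FlakyService:
    """Wraps a function and makes a given share of calls fail on purpose."""

    def __init__(self, func: Callable[[Any], Any], failure_rate: float, seed: int = 0):
        self._func = func
        self._failure_rate = failure_rate
        self._rng = random.Random(seed)  # seeded so experiments are repeatable

    def __call__(self, arg: Any) -> Any:
        if self._rng.random() < self._failure_rate:
            raise ConnectionError("injected fault")
        return self._func(arg)


def call_with_retry(service: Callable[[Any], Any], arg: Any,
                    attempts: int = 3, fallback: Any = None) -> Any:
    """The resilience measure under test: retry, then fall back to a default."""
    for _ in range(attempts):
        try:
            return service(arg)
        except ConnectionError:
            continue
    return fallback


healthy = FlakyService(lambda x: x * 2, failure_rate=0.0)
total_outage = FlakyService(lambda x: x * 2, failure_rate=1.0)

normal_result = call_with_retry(healthy, 21)
outage_result = call_with_retry(total_outage, 21, fallback=-1)
```

In the total outage case every retry fails and the caller gets the fallback value instead of an unhandled exception, which is exactly the kind of behavior you want to verify before a real outage does it for you.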

Recap

All in all, we feel that in addition to the obvious Copilot spam and the expected Fabric GA announcement, Ignite provided us with several welcome updates related to developing more capable AI solutions (with GPT-4 Turbo and the upcoming Vision capability). While the focus will obviously be on Fabric for data developers and Copilots for the more business-minded people, we think that the Azure AI Search improvements (especially related to vectorization) will also be important. This is especially true in the near future when enterprises start to tackle the issue of how to enable searching the vast mountains of unstructured data they possess. Furthermore, as data people, we think the synthesis of the different data platforms and AI solutions is a development surely worth following!