Migrating BizTalk flows to Azure is not always a straightforward task. While some flows are fairly easy to move almost "as-is", others can present significant challenges.

In one of my projects, I had to deal with an integration between SAP and an external SFA (Sales Force Automation) system. The SFA system relied heavily on SQL and used a staging database for integration. In BizTalk, we naturally used the WCF-SQL adapter as our main tool of choice, along with a fair amount of XSLT mapping to convert data to and from SAP RFCs.

Given tight constraints on time and resources, especially around testing, we set out to explore how we could migrate this to a proper Azure-based solution.

Rethinking the Approach

On the SQL side, we relied heavily on user-defined table types when calling stored procedures, passing in typed sets of data to be upserted into staging tables. From experience, we knew that the SQL connector in Logic Apps doesn’t handle user-defined table types very well. So we quickly decided to use an Azure Function instead of the built-in connector.

I started by writing a basic Azure Function that could execute SQL statements on on-premises databases (one of the requirements for several integrations).

Once that was in place, I began migrating one of the first flows. But I hit a roadblock when I looked at the (rather large) XSLT involved. Migrating it seemed like a major challenge. That led me to explore other flows with similar requirements, and I started wondering whether there might be a better way forward.

Eureka Moment

I kept thinking about what the best solution could be. And then, one morning during my commute, I had a bit of a Eureka moment:

Instead of reimplementing the logic in Logic Apps or recreating the SQL mapping logic, I wondered, could I just use the same XML BizTalk already generates?

When you think about it, the XML schema (especially when you're just calling stored procedures) is actually quite straightforward to handle. Let’s look at a simple example:

<Request xmlns="http://schemas.microsoft.com/Sql/2008/05/TypedProcedures/dbo">

<CreateCustomers>

<CustomersTVP>

<CustomerType xmlns="http://schemas.microsoft.com/Sql/2008/05/Types/TableTypes/dbo">

<Row>

<CustomerId>1</CustomerId>

<Name>Jane Doe</Name>

<Email>jane@example.com</Email>

</Row>

<Row>

<CustomerId>2</CustomerId>

<Name>John Smith</Name>

<Email>john@example.com</Email>

</Row>

</CustomerType>

</CustomersTVP>

<CreatedBy>admin_user</CreatedBy>

</CreateCustomers>

</Request>

This XML calls a stored procedure named CreateCustomers. It has two input parameters:

CustomersTVP: a user-defined table type called CustomerType.

CreatedBy: a simple string used for logging or audit purposes.

Parsing the XML

The XML is parsed using LINQ to XML, which makes it easy to extract values and map them to SQL input parameters. In this post, I’ll highlight a few key parts of the code and explain how they work. For our use case, we exclusively use stored procedures. While the BizTalk schema also supports inline SQL statements, that feature is not covered in this blog post.

1. Stored Procedure(s)

First step is to extract the stored procedures we need to call. That can be done with the following line of code:

var spElements = xmlDoc.Root?

.Elements()

.Where(e => e.Name.Namespace.NamespaceName.Equals("http://schemas.microsoft.com/Sql/2008/05/Procedures/dbo"))

.ToList();

It just takes all elements directly under the root node (<Request xmlns="http://schemas.microsoft.com/Sql/2008/05/TypedProcedures/dbo">) and selects all that have the namespace specific for stored procedures. That should give us a list of all the stored procedures we want to call.

2. Parameters

For each stored procedure we need to find out which parameters we need to add. This can be simple types or user-defined table types.

foreach (var spElement in spElements)

{

// Get the parameters for the stored procedure

foreach (var parameterElement in spElement.Elements())

{

string parameterName = parameterElement.Name.LocalName;

var tableTypeElement = parameterElement.Elements()

.FirstOrDefault(e => e.Name.Namespace.NamespaceName.Equals("http://schemas.microsoft.com/Sql/2008/05/Types/TableTypes/dbo"));

if (tableTypeElement != null)

{

string tableTypeName = tableTypeElement.Name.LocalName;

DataTable dataTable = await BuildDataTableFromTableType(sqlXmlRequest.ConnectionStringKey, tableTypeName, tableTypeElement, cancellationToken);

sqlStatement.Parameters.Add(parameterName, dataTable);

}

else

{

sqlStatement.Parameters.Add(parameterName, parameterElement.Value);

}

}

}

To check if a parameter is a user-defined table type, we can check if the child of the parameter element has the Table-Types namespace. To add that one, we need some extra parsing (which I will explain in the next step). The simple parameters can just be added by name and value.

3. User-defined table type

This step is a little bit tricky. Calling a stored procedure with a user-defined table type expects you to pass a DataTable. To be able to do that, I execute a query on my SQL server to get the definition of the user-defined table-type.

string query = $@"

SELECT

c.name AS ColumnName,

t.name AS DataType

FROM sys.columns c

JOIN sys.types t ON c.user_type_id = t.user_type_id

WHERE c.object_id = (

SELECT type_table_object_id

FROM sys.table_types

WHERE name = @TableTypeName)

ORDER BY c.column_id;";

With the result of this query I can easily add columns to my DataTable.

foreach (var row in result.First().Data)

{

string columnName = row["ColumnName"]?.ToString() ?? string.Empty;

string dataType = row["DataType"]?.ToString() ?? string.Empty;

dataTable.Columns.Add(columnName, GetTypeFromSqlDataType(dataType));

}

And after that we can add the rows to our DataTable.

foreach (var rowElement in tableTypeElement?.Parent?.Elements() ?? [])

{

DataRow dataRow = dataTable.NewRow();

foreach (var field in rowElement.Elements())

{

string fieldValue = field.Value;

Type columnType = dataTable.Columns[field.Name.LocalName].DataType;

if (string.IsNullOrWhiteSpace(fieldValue))

dataRow[field.Name.LocalName] = DBNull.Value;

else

dataRow[field.Name.LocalName] = Convert.ChangeType(fieldValue, columnType);

}

dataTable.Rows.Add(dataRow);

}

How you handle empties is totally up to you. In my case, I needed the DBNull.

Azure Function

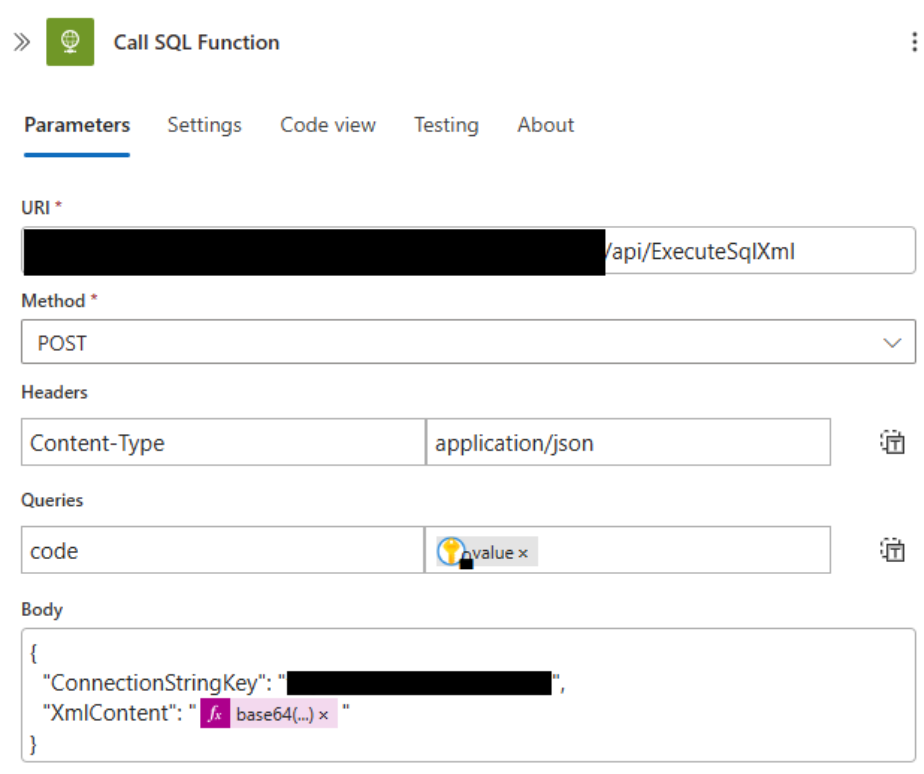

To bring everything together, I implemented an Azure Function that I call from within my Logic Apps.

One key design decision was to pass the ConnectionStringKey as a parameter. This allows me to select the appropriate database at runtime without modifying the function’s environment variables. Secrets are never hardcoded—the actual connection string is securely retrieved from Azure Key Vault.

This approach suited my use case, where I needed to support multiple databases (e.g. on-premises and in Azure). However, if you're working with a single, fixed database, you could just as easily configure the connection string as an environment variable instead.

The XmlContent parameter is flexible: it supports either a Base64-encoded XML payload or a reference to a blob containing the XML data.

Extra

At the time of writing, this is implemented as an HTTP-triggered Azure Function. In the future, I may also offer an asynchronous version, triggered via Azure Service Bus. This would be useful in scenarios where the SQL query takes longer to complete—especially considering the 2-minute timeout limit for HTTP actions in a consumption-based Logic App.

Conclusion

This isn’t how you’d build a new integration from scratch. But for migrating BizTalk flows, it works surprisingly well.

By reusing the existing XML that BizTalk already understands, we avoided redoing schemas, mappings, and logic. It let us move fast, keep things stable, and get off BizTalk without a full rewrite.

Not perfect, but for legacy migrations? It’s a solid shortcut.