This is a short series on Azure Landing Zones implemented with various levels of difficulty, in which we talk about Microsoft Cloud Adaption Framework and the different accelerators they provide, and compare them to the live-world experience of implementing an Azure Platform with the Everything-as-Code -approach. In first part we covered sandbox landing zones and some (opinionated) basic terminology and in second part we covered the second level of difficulty - using the ALZ-Bicep reference implementation from Microsoft to do a onetime-deployment.

In this third part, we will cover custom landing zone implementations as software projects with a lifecycle and change management. A word of warning, though - nothing in Cloud Adaptation Framework suggests that you should consider change management on this level, so keep that in mind before you go and have a dialogue with the local ITSM folks.

Configuring Landing Zone networking

Let's first address the elephant left in the room in the previous part of the series; configuring landing zone networking. The easy way to avoid a lot of frustration for every party involved is to stick only to subscription vending - you have a standardized landing zone implementation with minimal configuration, which creates a one-time deployment of landing zone resources. You provision a subscription, identity-related things, maybe a key vault and a bare-bones virtual network that is peered to the hub network.

But in some cases, you have a lot of (security-related) requirements that you, as the guy in the ivory cloud tower, need to impose upon others. Maybe you are working in some ultra-secure government thing, where everything that is not explicitly allowed needs to be blocked. Maybe all network traffic needs to be routed through a specific firewall (in which case you are in a bit of a pickle either way - if it's you who manages the firewall, or some other team that does that).

What this ultimately ends up to is that you somehow need to enforce subnet-level controls on the landing zone networks. Remember in the first part when I said that you want to think about policies as the first thing when designing landing zones? This is where it pays off. Enforce private endpoint network policies? Create an Azure Policy that does that. Enforce deny all rules at some priority? You guessed it.

The other way to do things is to simply own the configuration yourself, which is the route we went first. Creating a landing zone implementation that takes in virtual network and subnet(s) configuration as parameter files is not rocket science - it's just that the parameter files tend to grow lengthy. What's problematic with this approach is that if you want to enable self-service deployments, you also have automate those parameter changes - again, not a great technical feat, but things tend to get more complicated as they, well, grow more complicated.

There is a third way, and that is to let the workload team deploy the spoke virtual network on their own. There are some obvious challenges with this approach, as someone still has to peer the spoke network to the hub network, which requires both enough access and - one hopes - a crafty enough automation or tooling that picks up the creation of the new network and automatically peers it if certain conditions are met.

Such an approach would probably also require a luxury IP Management system that supports automation. Again, you might want to evaluate Azure Virtual Network Manager if you are just starting your Landing Zone implementation journey.

Landing Zones as software projects

Let's think about the Everything as Code -approach for a while; if you are implementing your platform with Infrastructure as Code, the changes are that development happens iteratively, as development usually does. This means that your code will have some kind of lifecycle. There are always new features to implement and technical debt to be paid. Another factor for iterative development is that Azure, as a cloud provider, is constantly evolving, as are the practices of running your platform on top of it. You get new services and new versions of services, and you might get new capabilities to your chosen tools, like the MS Graph provider for Bicep or deployment stacks.

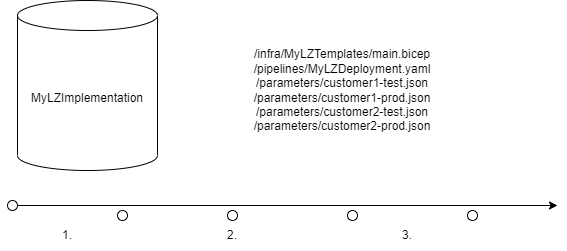

Consider a situation where you have your landing zone implementation in one repository - maybe the one where you bootstrapped ALZ-Bicep - and you've added some logic that utilizes the subscription vending module and passes parameters to it. You have more or less successfully deployed several landing zones and stored the parameter files used to the same repo [1 in the picture above]. Then you implement some more magic - say, deployment stack support to keep those devs from meddling with your lovingly crafted route tables - and merge the changes to your main branch [2].

You provision some more landing zones and add parameter files and maybe hotfix some things while you are at it [3]. And then some poor workload PO comes to the office, wiping sweat from his/her forehead, telling you that everything is gone. Some guy with too much ownership went and deleted everything, including your not-deployment-stacked landing zone resources, and they have been up all night restoring their clickops-resources, except for the virtual network and the configuration your deployment created.

This is the point where you find out that you configuration and your code have diverged from each other; the parameter files have not been touched in months, whereas the input parameters of your IaC implementation have. It is nothing that could not be fixed with some creative commit history inspection, but it is additional work nonetheless.

A case for Landing Zone lifecycle management

You might need to have the capability to re-deploy your Landing Zones. I can think of at least four different re-deployment scenarios.

- Redeploying an existing Landing Zone with exactly the same parameters ("Something hit the fan" -scenario).

- Redeploying an existing Landing Zone with different parameters (Like changing the owner or adding a new subnet with associated configurations to virtual network).

- Updating the Landing Zone to a newer implementation with exactly the same parameters.

- Updating the Landing Zone to a newer implementation with parameter changes (either the parameters change between Landing Zone versions, often paired with "while you are doing that, could you also add...").

These lead to the following kind of questions:

- How do you version your Landing Zone code? Do you do versioning in a repository with branching or tags, or do you push your code to some artifact storage and version it there?

- How do you store your workload parameters? In parameter files, or Azure DevOps variable groups, or in some configuration service?

- How do you version your workload parameters? Do you need to?

- How do you combine your Landing Zone code and the parameters? If you version both, do you do it in the same repository?

Each of these might or might not add complexity to your system design. I have not found the best practices for this kind of question. Accelerators don't typically have answers for lifecycle management, since accelerators are meant to accelerate you to a journey, not present a manageable solution.

We separated our code and configs into different repositories, and handle the dependencies with good old release branches in the code repo, and by having the deployment pipelines in the config repo - and a pipeline resource reference to a release branch in between.

Landing Zone implementation considerations

Even if the Landing Zone implementation usually deploys relatively few visible resources, we still had to make significant changes to the implementation during the first year of development. The network components and their parameterization have changed a lot, due to things like changing requirements.

I would suggest starting by really taking a long look at the naming standard your organization is using and spending more than a minute thinking about how to name the landing zone resources. Because if you don't, these things will return to bite your hind side sooner or later. Landing Zone implementation is a kind of reference architecture on which a lot of things depend on.

Say, you deploy a subnet for private endpoints or some form of self-hosted agents with the Landing Zone, and pretty soon you have a bunch of other deployments relying on that naming - whether they should or should not do that. Change the naming and deploy without notice, and experience the joy of getting nightly calls. Another source of endless agony is resources like key vaults with unique names and an amount of time that must pass before you can delete them.

You should also spend some time thinking about the boundaries of responsibility between your centralized team and project teams. Who's responsible for what deployment and configuration? Do you lock down your Landing Zone resources so the project teams can't change them (at least manually)? Is it convenient to have centrally managed Landing Zone configs in the first place, if the project team does not have a solid and production-ready architecture for their network configs? By keeping all these things centrally managed, you can certainly make yourself a bit too popular and busy. By giving too much control outside, you probably won't be able to re-deploy your centralized stuff without a lot of tinkering.

The Holy Grail of re-deployability would probably be some kind of automated self-service (developer) portal which allows changing the minimal configuration and is heavy on the convention side. Maintaining a codebase that is still developed and ensuring the correct lifecycle controls is no small feat and you should expect to hire a large team to do that.

Editor's note: Microsoft Ignite happened while editing this part, and along came the Microsoft Platform Engineering guidance. Coincidence or.... Coincidence?

In the fourth and final part of the series we are going to take a brief look on different accelerators that can be found from Cloud Adoption Framework documentation and talk about special things like deploying workload resources as part of landing zone (spoiler alert; don't) and data landing zones.