In this post, you will learn what the EU AI Act contains, which other initiatives surround it, and where to find the information you need to guide your company's AI-powered journey as the compliance deadlines approach.

In March 2024 the European Parliament approved the EU AI Act, the first-ever comprehensive legal framework for artificial intelligence. It is important for Zure as a developer of AI solutions, and for our customers, to understand what is required of us to comply with the upcoming regulations.

Timeline of the EU AI Act

To get a better understanding of how long the AI Act has been in planning and how the rise of ChatGPT by OpenAI aligns with it, here are the key dates:

2018 Dec - Coordinated Plan for AI started

2021 Apr - Proposed AI Act

2022 Nov - ChatGPT launched

2023 Dec - Political Agreement

2024 Jan - AI innovation package for startups and SMEs

2024 Mar - EU AI Act finalized

2024 Jul - EU AI Act published in the Official Journal

2024 Aug - EU AI Act entered into force

AI Innovation for all

Support innovation while mitigating the harmful effects of AI systems in the EU.

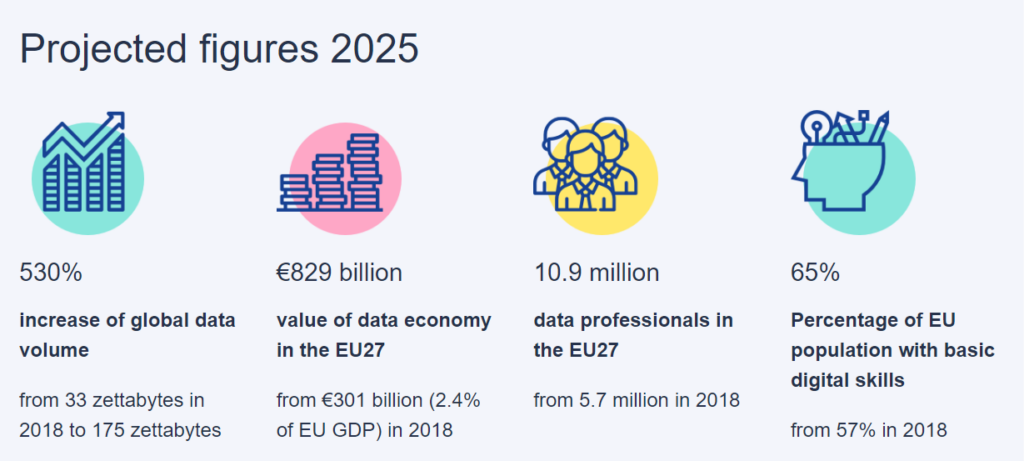

The EU Commission created the AI Innovation Package to support AI startups and small and medium-sized enterprises (which make up ~99% of businesses) in funding and developing AI-based solutions. Europe wants to build a world-class supercomputing ecosystem. The AI Innovation Package invests 4 billion euros across the public and private sectors until 2027. Most current and projected implementations are powered by GPAI (General Purpose AI) models, the category that ChatGPT, Google Gemini, and DALL-E also fall into.

GPAI covers capabilities such as image and speech recognition, audio and video generation, pattern detection, question answering, translation, and similar tasks.

The EU wants to serve companies with AI Factories: AI-dedicated EU supercomputers that facilitate AI development and offer a one-stop shop for startups. Common European Data Spaces are also being made available to the AI community as a much-needed resource for training and improving models.

EU is creating a single market for data

Common European Data Spaces

A common data space that is open to the participation of all organizations and individuals sounds like a useful plan in many ways: improving healthcare, generating new products and services, and reducing the costs of public services, to name a few goals from the European strategy for data. Creating a safe and secure infrastructure, and the parties to govern and develop it, will be a huge undertaking. Two building blocks already exist: the DGA (Data Governance Act), whose goal is easier data sharing in a trusted and secure manner, and the GDPR (General Data Protection Regulation), which protects our precious personal data.

Goals simplified:

- Data can flow within the EU and across sectors, for the benefit of all

- European rules, in particular privacy and data protection, as well as competition law, are fully respected

- The rules for access and use of data are fair, practical, and clear

- Investing in next-generation tools and infrastructures to store and process data

- Joining forces in European Cloud capacity

- Pooling European data in key sectors, with common and interoperable data spaces

Why all the rules, EU?

"The AI Act ensures that Europeans can trust what AI has to offer."

It is challenging to determine the reasoning behind an AI system’s decision or prediction and the actions it takes. This lack of transparency can make it difficult to evaluate whether an individual has been unfairly treated, such as in employment decisions or applications for public benefits.

While many AI systems present minimal risk and can help tackle numerous societal issues, some AI systems introduce risks that need to be managed to prevent negative consequences. Current laws offer some level of protection, but they fall short in addressing the unique challenges posed by AI systems.

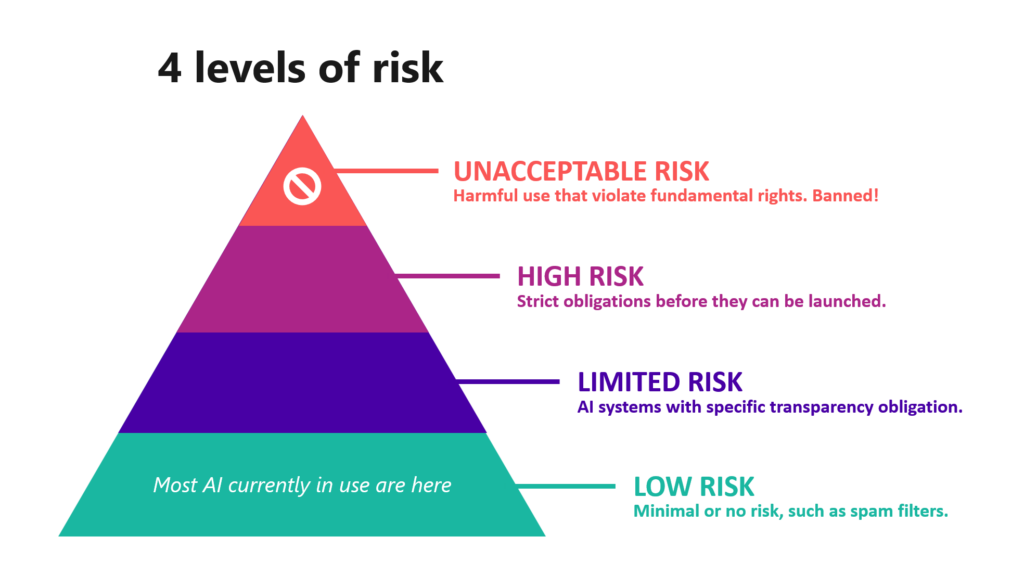

4 levels of risk

- Unacceptable risk: for example, AI systems that allow “social scoring” by governments or companies are considered a clear threat to people's fundamental rights and are therefore banned.

- High risk: AI systems such as AI-based medical software or AI systems used for recruitment must comply with strict requirements, including risk-mitigation systems, high-quality data sets, clear user information, and human oversight.

- Limited risk / Specific transparency risk: systems like chatbots must clearly inform users that they are interacting with a machine, while certain AI-generated content must be labeled as such.

- Minimal risk: most AI systems such as spam filters and AI-enabled video games face no obligation under the AI Act, but companies can voluntarily adopt additional codes of conduct.
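
As a rough illustration, the four tiers above can be kept as a small lookup table in an internal AI inventory. This is only a sketch: the names `RiskLevel`, `OBLIGATIONS`, `EXAMPLE_USE_CASES`, and `obligations_for` are hypothetical, the obligations are paraphrased from the list above rather than taken from the legal text, and the tier of a real system has to come from a proper assessment.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from the list above; this is not legal wording.
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: ["banned"],
    RiskLevel.HIGH: [
        "risk-mitigation system",
        "high-quality data sets",
        "clear user information",
        "human oversight",
    ],
    RiskLevel.LIMITED: [
        "tell users they are interacting with a machine",
        "label certain AI-generated content",
    ],
    RiskLevel.MINIMAL: [],  # no obligation; voluntary codes of conduct only
}

# Hypothetical inventory that reuses the examples from the list above.
EXAMPLE_USE_CASES = {
    "social scoring": RiskLevel.UNACCEPTABLE,
    "recruitment screening": RiskLevel.HIGH,
    "customer chatbot": RiskLevel.LIMITED,
    "spam filter": RiskLevel.MINIMAL,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the paraphrased obligations for a use case in the inventory."""
    return OBLIGATIONS[EXAMPLE_USE_CASES[use_case]]
```

Calling `obligations_for("customer chatbot")` returns the two transparency duties, while `obligations_for("spam filter")` returns an empty list.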

Read all the details in the nicely structured AI Act Explorer.

Are copyright matters covered in the AI Act?

Yes, regulated.

“Model providers additionally need to have policies in place to ensure that they respect copyright law when training their models.” (source)

What about the environment?

Yes, regulated.

“…reduction of energy and other resources consumption of the high-risk AI system during its lifecycle, and on energy efficient development of general-purpose AI models.” (source)

Providers of GPAI models, which are trained on large amounts of data and prone to high energy consumption, are required to disclose their energy consumption.

Get ahead of the game and develop by the regulations

The Act was published in the Official Journal on 12 July 2024 and entered into force on 1 August 2024, which is when the timer started ticking. Below is a simple timeline of when the regulations start to apply. If your company wishes to be ahead and voluntarily adopt the key obligations, you can join the AI Pact, or at least adopt the mindset in your company.

- Prohibition of unacceptable risks --> 6 months, ~February 2025

- Finalized Code of Practice for providers of GPAI models --> by April 2025

- Governance and obligations for GPAI --> 12 months, ~August 2025

- Obligations for high-risk AI systems --> 24 months, ~August 2026, for most systems; 36 months, ~August 2027, for high-risk AI embedded in regulated products
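
The month offsets in the list above are counted from the Act's entry into force on 1 August 2024. A minimal sketch of that arithmetic follows. The helper `add_months` and the `MILESTONES` table are illustrative names only, and the result is rounded to the first day of the month to match the approximate dates above, so check the Act itself for the exact application dates.

```python
from datetime import date

# Entry into force of the EU AI Act.
ENTRY_INTO_FORCE = date(2024, 8, 1)


def add_months(start: date, months: int) -> date:
    """Return the first day of the month that lies `months` after `start`."""
    total = start.year * 12 + (start.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


# Offsets in months, taken from the list above.
MILESTONES = {
    "Prohibition of unacceptable risks": 6,
    "Governance and obligations for GPAI": 12,
    "High-risk obligations (36-month phase)": 36,
}

for name, offset in MILESTONES.items():
    deadline = add_months(ENTRY_INTO_FORCE, offset)
    print(f"{name}: {deadline.year}-{deadline.month:02d}")
```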

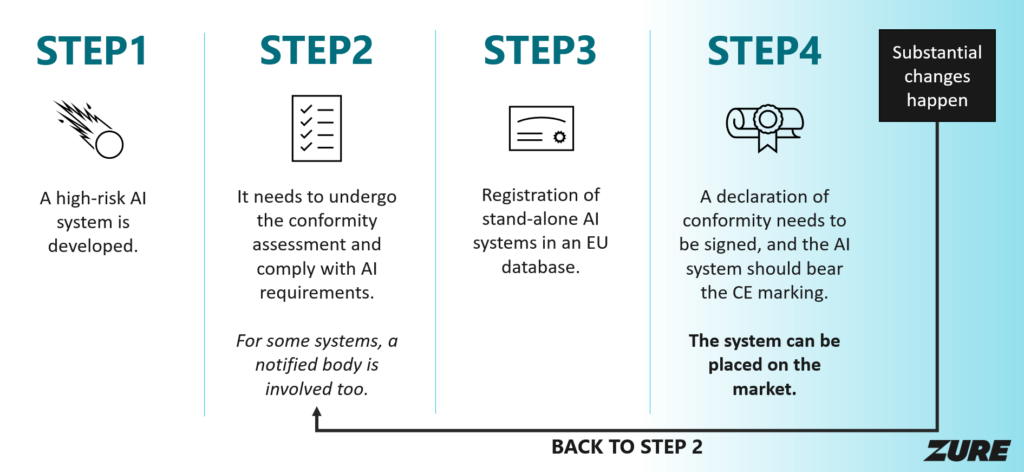

How does it work in practice for providers of high-risk AI systems?

“Once an AI system is on the market, authorities are in charge of market surveillance, deployers ensure human oversight and monitoring, and providers have a post-market monitoring system in place. Providers and deployers will also report serious incidents and malfunctioning.” (source)

Step 1 - A high-risk AI system is developed.

Step 2 - It needs to undergo the conformity assessment and comply with AI requirements.

Step 3 - Registration of stand-alone AI systems in an EU database.

Step 4 - A declaration of conformity needs to be signed, and the AI system should bear the CE marking. The system can be placed on the market.

If substantial changes happen, return to step 2.
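
For teams that track this in their own tooling, the four steps and the "return to step 2" rule can be modelled as a tiny state machine. This is a sketch under assumptions: `Stage`, `advance`, and `after_substantial_change` are hypothetical names, and real conformity work involves far more than a status flag.

```python
from enum import Enum


class Stage(Enum):
    DEVELOPED = 1            # Step 1: the high-risk AI system is developed
    CONFORMITY_ASSESSED = 2  # Step 2: conformity assessment completed
    REGISTERED = 3           # Step 3: registered in the EU database
    ON_MARKET = 4            # Step 4: declaration signed, CE marking applied


def advance(stage: Stage) -> Stage:
    """Move to the next step; the final step has no successor."""
    if stage is Stage.ON_MARKET:
        raise ValueError("system is already on the market")
    return Stage(stage.value + 1)


def after_substantial_change(stage: Stage) -> Stage:
    """A substantial change means the system has to redo step 2."""
    # Whatever stage the system was in, it falls back to DEVELOPED and
    # must pass the conformity assessment again before moving on.
    return Stage.DEVELOPED
```

Calling `advance` three times takes a new system from `DEVELOPED` to `ON_MARKET`, and `after_substantial_change` puts it back in front of the conformity assessment.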

Who bears the responsibility for legal obligations?

'Deployer' and 'Provider' are defined in the Act. The roles can overlap in confusing ways, which makes it hard to say who is who when, for example, a software company builds a solution on top of an existing AI model product for a customer who owns the solution and releases it to the EU market. In short, the roles are described as:

- Providers ensure the AI system is compliant and safe before it hits the market.

- Deployers ensure the AI system is used correctly and safely in the specific context.

- Sometimes, an entity can be both, depending on how the AI system is implemented.

Figuring this out for your project requires close, in-context interpretation. We will have to carefully examine and agree on these things with our customers so that everyone knows their legal obligations. When you see the penalties in the next section, you will understand why.

I recommend these two excellent posts with visual tables on how to determine the roles and responsibilities:

The EU AI Act – Know the rules and learn how to apply them. (kpmg.com)

'Provider' or 'Deployer' of an AI System under the EU AI Act (mishcon.com)

Penalties for infringement

Prohibited AI practices: up to €35m or 7% of worldwide annual turnover

Non-compliance with any of the other requirements: up to €15m or 3% of worldwide annual turnover

Supply of incorrect, incomplete, or misleading information to authorities: up to €7.5m or 1% of worldwide annual turnover
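
To get a feel for the numbers, here is a small worked example for the top tier (€35m or 7%). It assumes the "whichever is higher" reading that the Act applies to larger companies, and it uses whole euros and basis points (700 = 7%) to avoid rounding surprises. The function name `max_fine` and the turnover figures are made up for illustration.

```python
def max_fine(fixed_cap_eur: int, turnover_bps: int, annual_turnover_eur: int) -> int:
    """Upper bound of a fine: the higher of a fixed cap and a turnover share.

    turnover_bps is the share in basis points, so 700 means 7%.
    Assumption: the "whichever is higher" rule applies to the company.
    """
    turnover_based = annual_turnover_eur * turnover_bps // 10_000
    return max(fixed_cap_eur, turnover_based)


# Hypothetical company with EUR 1 billion turnover: 7% is 70m, above the 35m cap.
print(max_fine(35_000_000, 700, 1_000_000_000))

# Hypothetical company with EUR 100 million turnover: 7% is 7m, so the 35m cap applies.
print(max_fine(35_000_000, 700, 100_000_000))
```

In other words, the percentage only starts to matter once 7% of turnover exceeds the fixed amount, which for this tier happens above €500m in annual turnover.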

These are sizable ramifications for not complying with the regulations. We will see how they are enforced in the end and whether the process turns out to be easy enough to avoid penalties. Speaking of enforcing...

AI Office – “Enforcers of AI”

The European AI Office, established in February 2024 within the Commission, oversees the AI Act’s enforcement and implementation with the member states.

It aims to create an environment where AI technologies respect human dignity, rights, and trust. It also fosters collaboration, innovation, and research in AI among various stakeholders.

It also promotes international dialogue and cooperation on AI issues, recognizing the need for global alignment on AI governance. The European AI Office strives to position Europe as a leader in the ethical and sustainable development of AI technologies.

Experts of the EU AI Act

Carles Sierra is a scientist, research professor, and the President of The AI Board.

The other 8 members are also professors of different areas such as Cognitive Systems, Robotics, Engineering, Computer Science, Philosophy, and others. (Read more)

The AI Board has extended tasks in advising and assisting the Commission and the Member States.

The Advisory Forum will consist of a balanced selection of stakeholders, including industry, start-ups, SMEs, civil society and academia. It shall be established to advise and provide technical expertise to the Board and the Commission, with members appointed by the Board among stakeholders.

The Scientific Panel of independent experts supports the implementation and enforcement of the Regulation as regards GPAI models and systems, and the Member States would have access to the pool of experts.

In summary

It took a while to read through many different sites on this ONE topic of the "EU AI Act". It is quite easy to dismiss it as just another regulation, but there are so many things to consider in practice. I hope I gave a bit of insight into the reasoning behind it too. The drive for common data, and for helping even the smallest start-ups use these AI models and tools alongside the big-player megacorporations, is great!

It is important to also be responsible, ethical, and human-centered when developing any AI-powered service or product. We will see in the future how well this Act works out and how difficult it will be to go through the process of registering our solutions to be used within the EU market.

Thanks for reading and make sure to check below for all the sources and extra helpful content!

Top 3 recommended links

- EU AI Act Journal structured for easy reading: AI Act Explorer

- Explanation on Provider and Deployer responsibility by KPMG: The EU AI Act – Know the rules and learn how to apply them. (kpmg.com)

- Printable cheat sheet by Oliver Patel (LinkedIn post)

Read more about EU AI Act

- Main EU AI Act article: AI Act | Shaping Europe’s digital future (europa.eu)

- AI Innovation Package: Commission launches AI innovation package (europa.eu)

- Coordinated Plan on Artificial Intelligence: Coordinated Plan on Artificial Intelligence | Shaping Europe’s digital future (europa.eu)

- Study supporting the impact assessment: https://digital-strategy.ec.europa.eu/en/library/study-supporting-impact-assessment-ai-regulation

- FAQ: New rules for AI https://ec.europa.eu/commission/presscorner/detail/en/QANDA_21_1683

- European AI Office: European AI Office | Shaping Europe’s digital future (europa.eu)

- Common Data Spaces: Common European Data Spaces | Shaping Europe’s digital future (europa.eu)