This is a short series on Azure Landing Zones implemented at various levels of difficulty, in which we talk about the Microsoft Cloud Adoption Framework and the different accelerators it provides, and compare them to real-world experience of implementing an Azure Platform with an Everything-as-Code approach. In the first part we covered sandbox landing zones and some (opinionated) basic terminology.

In this second part we cover the second level of difficulty - using the ALZ-Bicep reference implementation from Microsoft to do a one-time deployment.

Level 2: Basic Landing Zones, one-time deployment

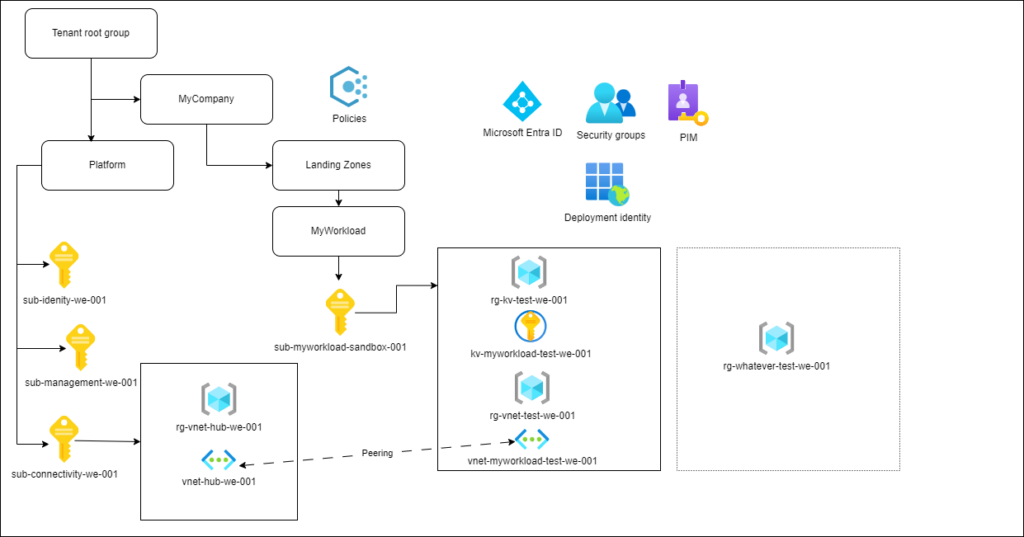

The second level is pretty much what the ALZ-Bicep implementation does: it deploys one or many Landing Zones with custom parameters and places them in the management group hierarchy. At this level, you'll add the networking, either as the classic Hub & Spoke model or the newer Virtual WAN. That is, if you need a network, and you should think long and hard about whether you do. I know that various Azure resource security baseline documents suggest you integrate your stuff into virtual networks and use Private Link. It's music to the ears of security people coming from the on-premises world, but deploying networks for workloads makes things a lot more complicated for everyone involved. So think about your security requirements and things like zero trust, and make an informed decision.

The complexity on top of sandbox deployments comes from being able to deploy multiple workloads and multiple Landing Zones (or environments) per workload. In other words, you'll have to add some more parameters to your implementation, both to be able to deploy separate Landing Zones and to be able to configure the resources you deploy into a Landing Zone. The ALZ-Bicep repository has orchestration modules that expose a hefty number of parameters, with which you provision a spoke network, peer it to the hub network, and provision a hub route table. What's missing at this level is the subnet-level configuration for the spoke network; we assume that someone or something else takes care of it.

Ideally, if you are doing your own Landing Zone implementation with the Everything-as-Code approach, you want to simplify the parameters as much as you can, but still expose the essential parameters to be set at deployment time.

A minimal set of parameters could be something like:

| Parameter name | Usage |
|---|---|
| Owner | Owner of the Landing Zone, to be set as security group owner |
| Location | Azure region for Landing Zones |
| Network configuration | Just the virtual network level. |
| Tags | Tags to be applied to all resources |
| Keyvault | Keyvault configs like network and RBAC/access policies |

These are values you might need to set per Landing Zone, since at least the virtual network addresses change for every environment. They could be stored as parameter files, Azure DevOps variable groups, or however you prefer to store your parameters.
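As an illustration of the parameter-file option, here is a minimal bash sketch that writes one ARM-style parameter file per Landing Zone. The function name and all parameter names (`parOwner`, `parLocation`, `parVnetAddressPrefix`, `parTags`) are hypothetical and would have to match whatever your own Bicep template declares. The Key Vault settings are left out for brevity, and the values are not JSON-escaped.

```shell
# Sketch only: print a parameter file for a single Landing Zone.
# The parameter names below are hypothetical, not taken from ALZ-Bicep.
# Values are inserted as-is, so they must not contain quotes or backslashes.
write_lz_params() {
  local owner="$1" location="$2" address_prefix="$3" cost_center="$4"
  cat <<EOF
{
  "\$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "parOwner": { "value": "$owner" },
    "parLocation": { "value": "$location" },
    "parVnetAddressPrefix": { "value": "$address_prefix" },
    "parTags": { "value": { "costCenter": "$cost_center" } }
  }
}
EOF
}
```

You could call it once per environment, for example `write_lz_params lz-owners westeurope 10.10.0.0/24 1234 > MyWorkload.dev.parameters.json`, and commit the result next to the template.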

Parameters that do not change per environment could be set either in parameter files or in a single shared pipeline variable file (or group).

| Parameter name | Usage |
|---|---|
| Workload name | Just the name that identifies the workload. Actual resource names should be constructed in the Bicep implementation to enforce the naming standard |
| Zone | If your management hierarchy is split into different zones like Online and Restricted Zones, create a set of parameter files and a variable file per zone |
| Other Stuff That Rarely Needs to Be Changed or Does Not Change Per Environment | We have a flag for decommissioning a set of Landing Zones here. |

For deploying the Landing Zone you might want to create an Azure Pipelines template that describes the deployment steps and takes in the name of the parameter file, or just the plain parameters for a single Landing Zone deployment, and then construct the main pipeline logic as you see fit. You can draw inspiration from the ALZ pipeline documentation. But if you drill further down into the sample pipeline, there are some things you'll need to address. For example, take a look at the deployment step for the spoke network:

```yaml
- task: AzureCLI@2
  displayName: Az CLI Deploy Spoke Network
  name: create_spoke_network
  inputs:
    azureSubscription: $(ServiceConnectionName)
    scriptType: 'bash'
    scriptLocation: 'inlineScript'
    inlineScript: |
      az account set --subscription $(SpokeNetworkSubId)
      az deployment group create \
        --resource-group $(SpokeNetworkResourceGroupName) \
        --template-file infra-as-code/bicep/modules/spokeNetworking/spokeNetworking.bicep \
        --parameters @infra-as-code/bicep/modules/spokeNetworking/parameters/spokeNetworking.parameters.all.json \
        --name create_spoke_network-$(RunNumber)
```

You can see the `az deployment group create` command getting all its parameters from `spokeNetworking.parameters.all.json`. When thinking about the deployment flow logic for multiple workloads and Landing Zones, you'll need to come up with some kind of strategy for how to store and fetch parameters for multiple deployments. At this difficulty level, supplying the workload and Landing Zone environment name might be enough, so something like:

```shell
az deployment group create --resource-group $(SpokeNetworkResourceGroupName) \
  --template-file infra-as-code/bicep/modules/spokeNetworking/spokeNetworking.bicep \
  --parameters @infra-as-code/bicep/modules/spokeNetworking/parameters/spokeNetworking.$(WorkloadName).$(environment).parameters.all.json \
  --name create_spoke_network-$(RunNumber)
```

Here the pipeline takes in the parameters `WorkloadName` and `environment` per run (say, 'MyWorkload' and 'dev'), and this might be enough if your use case is to deploy the Landing Zone resources once and forget about them. But for any other scenario, you'll need to build some lifecycle management and separation on top of the Landing Zone implementation, which will bring us to level 3 in the third part of the series.
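The per-workload, per-environment lookup described above could be sketched as a small bash helper that builds the parameter file name and fails early when the file is missing. This is a sketch under assumptions: the function names, the `PARAMS_DIR` variable and the file naming scheme are illustrative, and plain shell arguments stand in for the pipeline's `$(...)` variables.

```shell
# Sketch only: resolve and use a parameter file per workload and environment.
# PARAMS_DIR and the naming scheme are assumptions for illustration.
PARAMS_DIR="${PARAMS_DIR:-infra-as-code/bicep/modules/spokeNetworking/parameters}"

# Print the parameter file path for the given workload and environment.
spoke_params_file() {
  local workload="$1" environment="$2"
  if [ -z "$workload" ] || [ -z "$environment" ]; then
    echo "usage: spoke_params_file <workload> <environment>" >&2
    return 1
  fi
  printf '%s/spokeNetworking.%s.%s.parameters.all.json\n' \
    "$PARAMS_DIR" "$workload" "$environment"
}

# Deploy the spoke network, refusing to run if the parameter file is missing.
deploy_spoke() {
  local workload="$1" environment="$2" resource_group="$3" run_number="$4"
  local params
  params="$(spoke_params_file "$workload" "$environment")" || return 1
  if [ ! -f "$params" ]; then
    echo "parameter file not found: $params" >&2
    return 1
  fi
  az deployment group create \
    --resource-group "$resource_group" \
    --template-file infra-as-code/bicep/modules/spokeNetworking/spokeNetworking.bicep \
    --parameters "@$params" \
    --name "create_spoke_network-$run_number"
}
```

In an inline pipeline script this would be invoked as something like `deploy_spoke "$(WorkloadName)" "$(environment)" "$(SpokeNetworkResourceGroupName)" "$(RunNumber)"`. Checking for the file first gives a clearer error than letting the CLI fail on a missing path.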

For the whole experience of provisioning a new Hub and Spoke environment with the ALZ-Bicep reference implementation, I suggest you take a look at the step-by-step example by Toni Pehkonen, where he goes through the experience of provisioning a new repository with the ALZ PowerShell command. While the example does not really cover the usage of the workload spoke module explained above, it gives a good view of the complexity of configuring a whole new Hub environment - or a platform, if you prefer that loaded term.

This is probably also the point where you have to decide whose responsibility it is to handle the trickiest configuration - the above-mentioned subnet-level networking. The CAF documentation speaks about subscription vending as the process of handing out Landing Zones to workload teams and recommends that the workload teams should be granted autonomy to take care of their own networking.

This, essentially, takes a lot of the complexity out of the Landing Zone configuration and might actually empower you as the Platform dude to stick with handing out subscriptions with very basic identity-related things, essentially letting you do one-time deployments and skip all the following more complicated levels. So this would be a good point to have a governance and team maturity discussion with the stakeholders, agree on who's responsible for what, implement Azure Policies to enforce things that need to be enforced, and seal the deal with a good old RACI matrix.

One specific tool needs to be mentioned here, even if we haven't yet had time to give it a spin - Azure Virtual Network Manager might be something that could be utilized to lift that complexity from your configuration by allowing you to centrally impose security admin rules, which are evaluated ahead of network security group rules, on virtual networks.

In the third part of the series we are going to take a look at landing zones as software projects with change and lifecycle management, and finally some thoughts on landing zones with accelerators and special topics like data landing zones.