Data Security Posture Management for AI

- 13/05/2025

While security has always been a top priority in application development, the rapid growth of AI-enabled applications has made it even more critical. This is largely due to the accelerating pace of AI adoption. Furthermore, recently introduced regulations, such as the AI Act and NIS2, specifically address the use of AI-based applications, reinforced by potential local and industry-specific laws and restrictions. So, if your organization is planning to develop AI applications, are you fully aware of the security considerations required at every stage of the AI application development process?

This blog post gives you an overview of secure AI application development and of what you can do to protect yourself and your users from security challenges on your journey into the world of AI.

Have you heard about the Air Canada refund incident in February 2024? In that incident, an Air Canada customer reportedly manipulated the company’s AI chatbot to obtain a larger refund than expected. Or the healthcare data compromise in September 2024, in which compromised AI algorithms were used for patient data analysis? That breach exposed sensitive patient information and highlighted the importance of securing AI systems and algorithms in healthcare.

Reports like these keep increasing, forcing organizations to take protective measures around their use of AI-powered applications and services. Do you know which AI services your organization is currently using, and whether any protections are in place to prevent such incidents from happening in your environment?

Artificial intelligence has advanced greatly over the last 50 years, quietly supporting a variety of corporate processes until ChatGPT’s public release drove the development and use of Large Language Models (LLMs) among both individuals and enterprises. Before that, these technologies were limited to academic study or to specific but vital tasks within corporations, visible only to a select few.

Recent advances in data availability, computing power, and Generative AI capabilities, together with the release of tools such as Llama 2, Character.AI, and Midjourney, have moved AI from a niche into widespread acceptance. These improvements have not only made Generative AI technologies more accessible, but they have also highlighted the critical need for enterprises to develop solid strategies for integrating and exploiting AI in their operations.

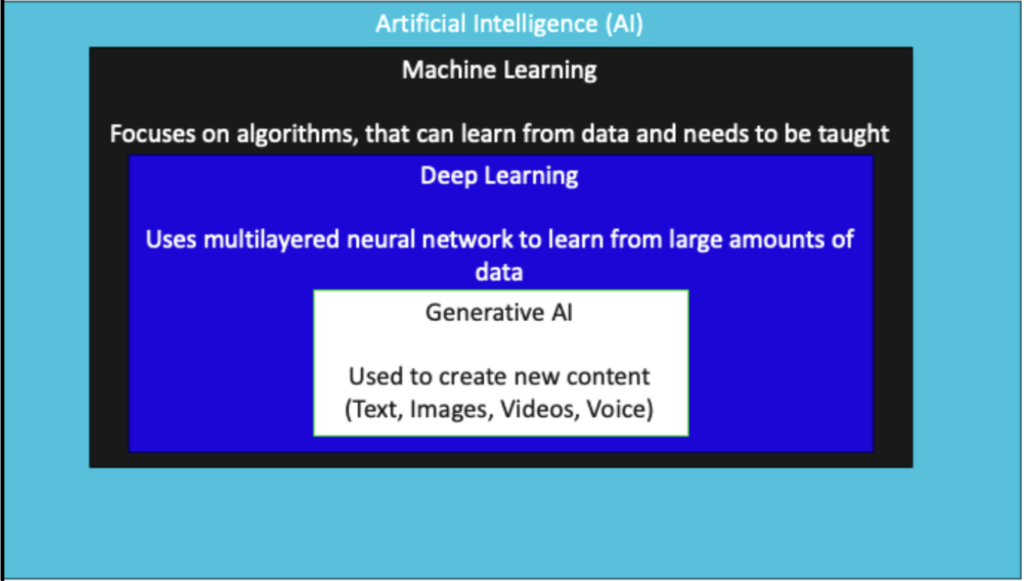

AI and Machine learning models explained:

Now that you’ve learned the basics of AI, you’re ready to create your own intelligent application using Azure’s native AI services. The business use case is set, the concept work is complete, and all necessary approvals are in place. It’s time to grab a cup of coffee and dive into the fun part – coding. But just as you’re about to start, the project manager interrupts with a straightforward question: “How do we begin?” You pause, realizing you’re not sure how to answer.

When does security come into play? It’s best to address security from the very beginning of your AI application development project. Here are the foundational steps:

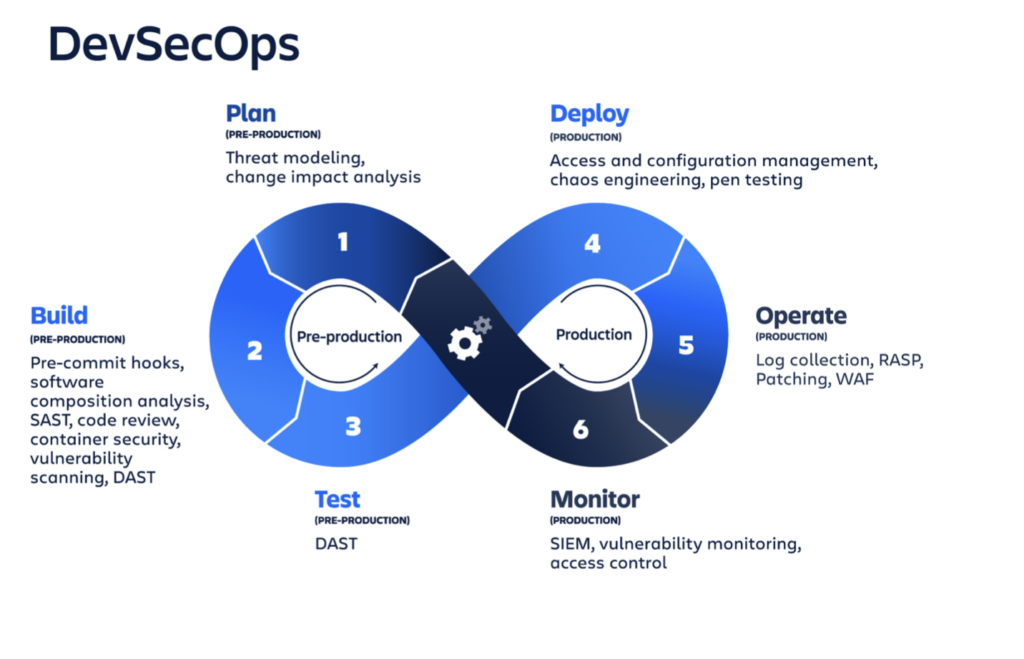

These steps align with the DevSecOps process, which integrates security into every phase of development. But how can you effectively implement this, and why is it essential? Let’s explore.

DevSecOps is a continuous process in which security becomes an integrated part of the software development lifecycle (SDLC). It combines tools and processes, for example, running Static Application Security Testing (SAST) or Software Composition Analysis (SCA) at agreed stages of the development project. It is not only about tools, though: it also includes security awareness training for the project's developers and the nomination of Security Champions, who promote a security mindset and support other developers with security issues. With solutions such as GitHub Advanced Security, you can integrate application security testing into the native developer workflow in Azure DevOps. It helps developers fix issues faster with code scanning autofix, detect leaked passwords with secret scanning, and save time with a regular expression generator for custom patterns, to mention just a few features.
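To make the idea of secret scanning with custom patterns more concrete, here is a minimal Python sketch. It is not how GitHub Advanced Security works internally; the pattern names, the `EXAMPLE_` key format, and the `scan_text` helper are all hypothetical and only illustrate the principle of matching source text against regular expressions.

```python
import re
from typing import NamedTuple

# Hypothetical custom patterns for illustration only. Real scanners ship
# much larger, provider-specific rule sets.
CUSTOM_PATTERNS = {
    # A quoted value of 8+ characters assigned to a password-like name.
    "generic_password_assignment": re.compile(
        r"""(?i)\b(password|passwd|pwd)\s*[:=]\s*["']([^"']{8,})["']"""
    ),
    # An invented key format: the prefix EXAMPLE_ plus 32 uppercase
    # letters or digits.
    "example_api_key": re.compile(r"\bEXAMPLE_[A-Z0-9]{32}\b"),
}


class Finding(NamedTuple):
    line_number: int
    pattern_name: str


def scan_text(text: str) -> list[Finding]:
    """Return one finding per pattern match, without echoing the secret."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in CUSTOM_PATTERNS.items():
            for _ in pattern.finditer(line):
                findings.append(Finding(line_number, name))
    return findings
```

A pipeline step could call `scan_text` on each changed file and fail the build when the returned list is not empty. The sketch deliberately reports only the line number and pattern name, so the secret itself never ends up in build logs.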

When it comes to the security of AI functionalities, it is highly recommended to conduct a risk assessment and create a threat model focused on the use case of the functionality, regardless of whether the AI model is intended to create material or to process large amounts of data. The assessment should analyze the model architecture, the training data and training methodologies where applicable, the possible biases and limitations of the model, and user privacy aspects. All of this can be included in the DevSecOps process of the development project.
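One way to keep such an assessment visible in the project is to track it as a simple checklist. The sketch below is only an illustration: the four areas come from the paragraph above, while the `RiskAssessment` class, its method names, and the idea of recording risks as free-text strings are assumptions made for this example.

```python
from dataclasses import dataclass, field

# The four areas named in the text; everything else here is illustrative.
ASSESSMENT_AREAS = (
    "model_architecture",
    "training_data_and_methodology",
    "biases_and_limitations",
    "user_privacy",
)


@dataclass
class RiskAssessment:
    use_case: str
    # Maps each assessed area to the risks found there (possibly none).
    findings: dict[str, list[str]] = field(default_factory=dict)

    def record(self, area: str, risks: list[str]) -> None:
        """Mark an area as assessed and store the risks identified."""
        if area not in ASSESSMENT_AREAS:
            raise ValueError(f"Unknown assessment area: {area}")
        self.findings[area] = list(risks)

    def missing_areas(self) -> list[str]:
        """Areas that have not been assessed yet, in the defined order."""
        return [a for a in ASSESSMENT_AREAS if a not in self.findings]

    def is_complete(self) -> bool:
        return not self.missing_areas()
```

For example, a team could create `RiskAssessment("support chatbot")`, call `record("user_privacy", ["chat logs may contain personal data"])`, and treat a non-empty `missing_areas()` result as a reason to hold the release until the remaining areas have been reviewed.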

Contact us to get started with our DevSecOps Champion program. In the program, we walk you through common application security vulnerabilities, teach your developer teams how to create threat models, and support you in choosing the right tools for the environment – just to mention a few. With our latest knowledge about AI application development, we can help you secure your AI application development projects in Azure.
