Streamlining Compliance with Microsoft Purview’s Alerting Features

- 20/02/2025


“Phishing is the top digital crime type identified by the FBI”, states the ENISA Threat Landscape 2023 report (ENISA, europa.eu). It has now been reported that a simulated multi-person video conference with fabricated participants was used in an attack in Hong Kong, reportedly the first case of its kind. More about the case in Ars Technica’s article: Deepfake scammer walks off with $25 million in first-of-its-kind AI heist | Ars Technica. There is still room for doubt about whether this actually happened, but here at Zure we believe it is within the realm of possibility. Have you considered how to protect yourself against these kinds of risks?

There is a long history of phishing attacks. Every one of us knows about malicious emails. Many have heard about SIM swapping used to spoof the identity of an SMS sender, or about deepfake voice and video cloning. With SMS and email, it’s hard for an end user to be entirely sure that the origin is legitimate. With video calls it’s even harder.

Phishing attacks have been around for at least two decades, and they have been actively used by nation-state actors and criminals alike. Some of the attacks are clumsy, but recognizing an obvious scam often gives us a false sense of safety, which might make us less wary of the next attack. A simple attack can be easy to carry out, but to lower the barrier for evil deeds even further, there are also PhaaS (Phishing as a Service) providers. According to the same ENISA Threat Landscape report mentioned earlier, you can buy the capabilities for a phishing attack for just $40. These capabilities contain pretty much everything you need, from contact lists of potential targets to the means to bypass spam filters and multi-factor authentication.

I would dare to say that you really need to practice recognizing deepfakes to be able to spot them. But here are a few tips that you might use in a video conference:

In a real-time video conference, you would need to zoom in really close on surfaces to recognize AI-generated oddities. That might be hard, and video compression, small screens, or just a bad camera can produce similar artifacts. Also, the more we learn about the kinds of mistakes AI makes, the better AI becomes at avoiding them, so this will be a long-running effort.

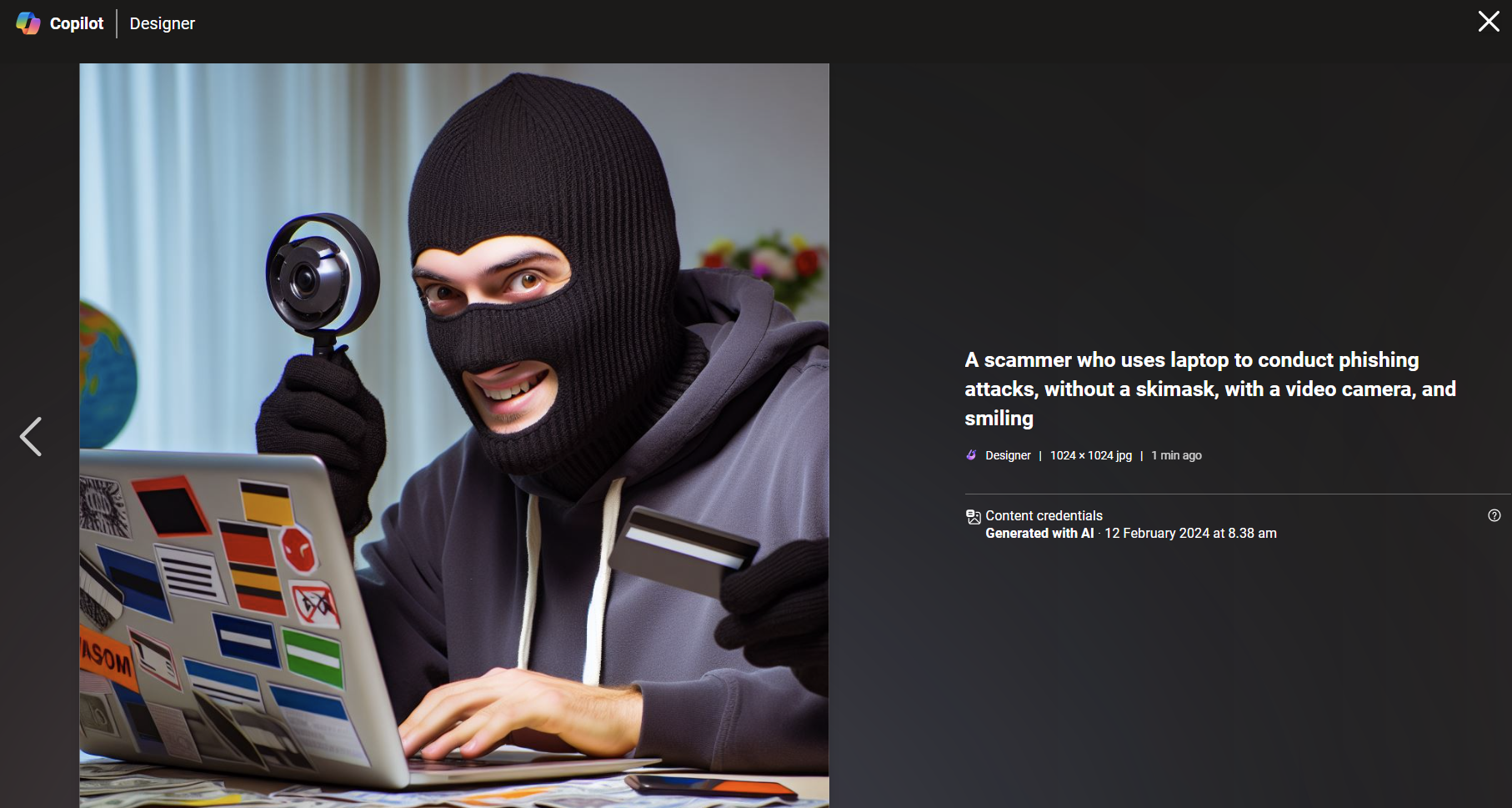

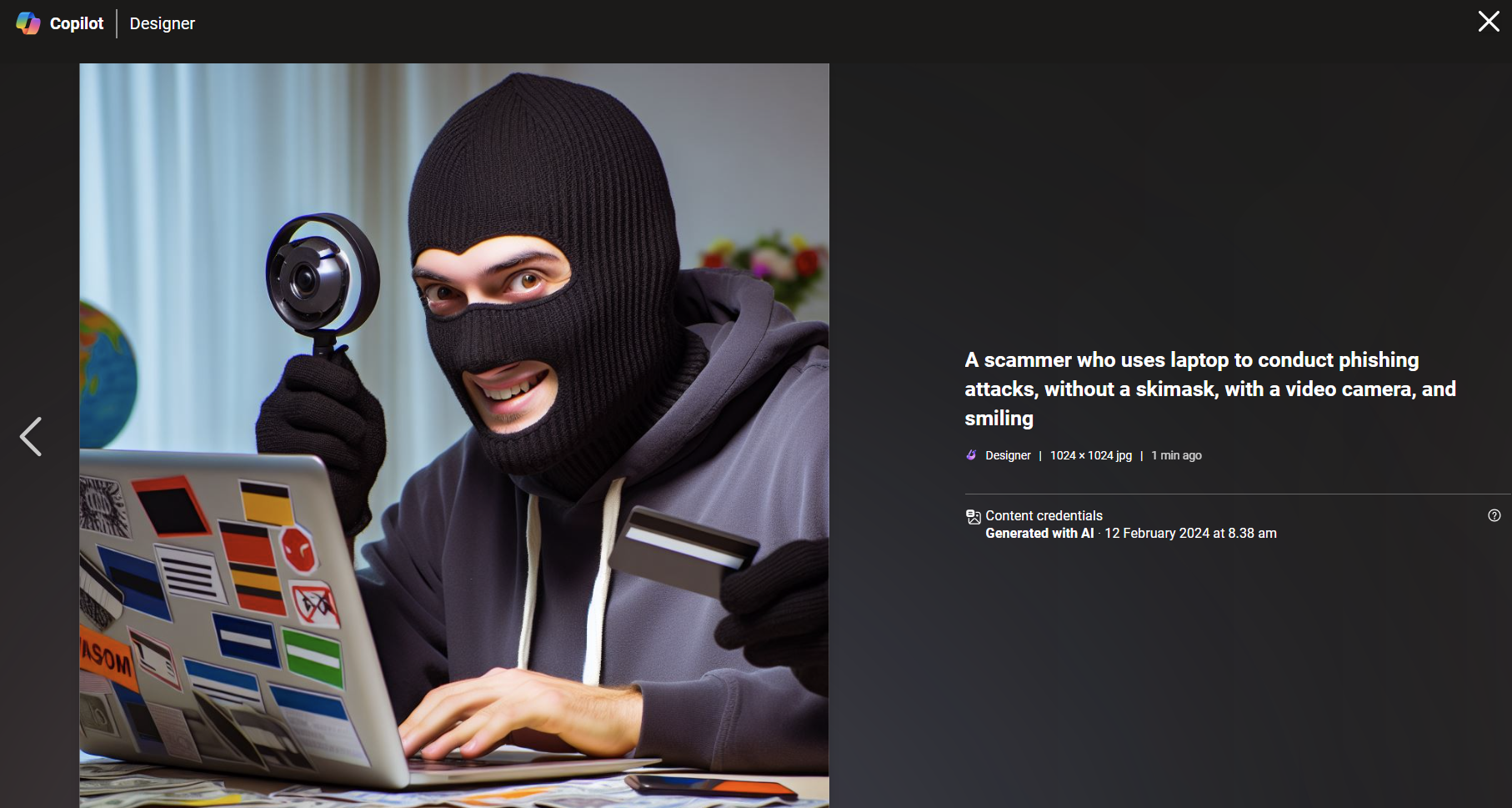

If you look at the picture above, it might look pretty good at first glance. Taking a closer look reveals multiple alarming features. The right eyelid is weird, the person has three hands, the stickers on the laptop look weird, the credit card (or whatever it is supposed to be) has no text on it, and the reflection on the mobile phone looks weird. Also, I have never in real life seen someone use a video camera attached to a magnifying glass the wrong way around. Now, if you take all this and use your imagination, you can come up with questions to ask the person in the video chat that trick the deepfake AI into acting weirdly.

Security awareness training has already taught our users to be wary. But how can you, in a video meeting, be sure whether the other party has started the conversation with malicious intent?

By nature, we are willing to help and trust people. That is a prerequisite for any work community to really shine. This is where social engineering hurts us badly: it directly attacks our natural tendency and willingness to trust people. However, we should not give up trusting people, because we need that trust to be able to work. Instead, we need to trust but verify. Build a culture where it is normal to verify identities, verify intentions, and use other channels to verify actions that might cost us money.

Verifying can be done in multiple ways. One common way is to receive a request in one channel and then a confirmation in another, for example in an order processing system. Then, in addition to being able to fake voice and video, the attacker would also need a stolen identity to carry out an attack. The point is that we should no longer trust a phone call, SMS message, email, or video conference on its own. We need processes for verifying the identity of the party contacting us. I have heard suggestions of using secret greetings or some chit-chat in video calls. There have also been suggestions of having secret emojis for verification or emergency situations. When you can’t trust a single communication channel, you need to multi-factor verify everything, everywhere.
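The "request in one channel, confirmation in another" idea can be sketched in a few lines of Python. This is only an illustration of the principle, not a description of any real system: the channel names, the `Request` class, and the rule that a confirmation must arrive on a channel other than the one the request came in on are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical channel names, for illustration only.
CHANNELS = {"email", "sms", "phone", "video", "order_system"}


@dataclass
class Request:
    """A request that may cost money, plus the channels that confirmed it."""
    requester: str
    action: str
    origin_channel: str
    confirmations: Set[str] = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        """Record a confirmation received on the given channel."""
        if channel not in CHANNELS:
            raise ValueError(f"unknown channel: {channel}")
        self.confirmations.add(channel)

    def is_verified(self, required: int = 1) -> bool:
        """True once enough channels other than the origin have confirmed."""
        independent = self.confirmations - {self.origin_channel}
        return len(independent) >= required


# A request made in a video call is not verified by the video call itself.
req = Request("cfo", "transfer funds", origin_channel="video")
req.confirm("video")
print(req.is_verified())  # False

# A confirmation in a separate system satisfies the rule.
req.confirm("order_system")
print(req.is_verified())  # True
```

The design choice here is that the originating channel never counts towards verification, so an attacker who controls a single channel cannot both make and confirm a request.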

Where should we then draw the line for when verification is required? You could make up rules like “in these situations you need additional verification”. But many organizations already have ID badges, and ordinary people still struggle to ask others in the building to show theirs. This kind of social awkwardness needs to be removed from company culture. It is better to verify people often so that everyone gets used to the process, and people need to understand why verifying is important and how it protects the company.

At Zure, we already figured out that our secret emoji could be the polar bear. I did not agree with that, since it has way too much media presence in our company pictures. So here is the unveiling of our secret polar bear emoji.

Multisite or remote-work organizations might be more vulnerable to these kinds of attacks. In an organization where people work next to each other and see each other face-to-face daily, it’s much harder to carry out these kinds of attacks. In a similar manner, smaller organizations might have less need for badges, since people know each other well. Larger and multisite organizations will struggle with this more. Some organizations might be able to avoid these kinds of attacks by having more autonomous sites with more local mandate. But in organizations where large money transfers between continents are normal, different kinds of approaches are needed.
